Welcome back to the LuaJIT series: After the 1st part is behind us, where we introduced the VM & execution model. Now we’ll get a source-code tour & see the inner workings of the JIT-compiler of LuaJIT.

Most of my work here is based on threads from the LuaJIT mailing list and this great talk by Vyacheslav Egorov, which provides an overview of interesting things in LuaJIT on a higher level. In this post, I’ll try to dive a bit deeper by providing source code snippets/references.

Introduction: What is JIT

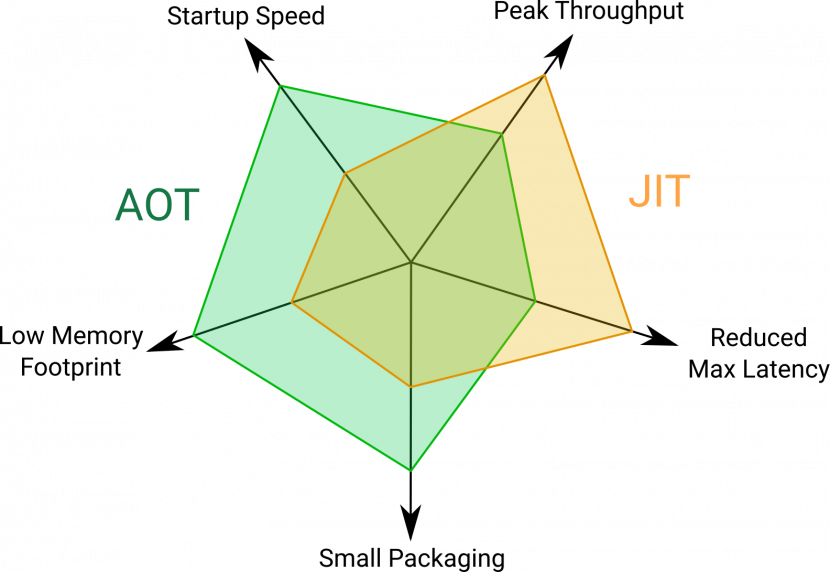

As opposed to AOT(Ahead of Time) compilation which is more traditional, where a source code turns into a bytecode ahead of time. In JIT compilation, the process is a bit different.

Just-in-time compilation is a way of executing computer code that involves compilation at run-time rather than before execution. In this process, bytecode is being translated to machine code, which is then executed directly by the CPU(instead of the Lua VM). This enables better performance/speed because in JIT, we’re cutting off an extra layer of indirection(the Lua VM interpreter).

Credit: https://shuracore.com/compiler-design/jit-and-aot/

Modes of LuaJIT

Lua has two states: interpretation mode, and JIT mode:

- Interpretation mode: Described in part 1 of this series.

- JIT mode: In JIT mode, the ‘tracing JIT’ is enabled. As opposed to a ‘method JIT’(which is more traditional) that works by discovering and optimizing hot methods - A tracing JIT discovers hot traces/paths of execution and turns them into machine code.

The Tracing JIT of LuaJIT has multiple stages, which we will cover below.

Steps of JIT

The JIT can be divided into several steps:

- Step 1: Count the ‘heat codes’

- Step 2: Record ‘hot’ code-paths, generate IR code.

- Step 3: ‘Generation phase’, generation of machine instructions.

- Step 4: Execute the newly generated machine instructions.

Buckle up, it’s going to be a wild ride (⌐ ͡■ ͜ʖ ͡■)

Step 1: Profiling/Counting the ‘heat’ codes

In the first step, the Lua VM maps the execution flow & detects potential areas to trace/compile later.

LuaJIT keeps track of the ‘hotness’ of instructions/potential traces in a hashtable. This hashtable is saved in the GG_State→hotcount[] struct member. It uses the hotcount_{get/set} macro to access a hotcount, and in the VM assembly code, a hotcount is accessed by referencing the GG_DISP2HOT macro, this macro measures the offset from the beginning of the state object to the the beginning of hotcount[] hashtable.

The general assumption of how LuaJIT is designed is that: All programs spends most of their time in loops. So the way it detects potential areas to trace and improve performance is by inserting hooks into the loop/jmp and call instructions. If the hotcount reaches beyond JIT_P_hotloop(=56, default val) this part of the code will be considered as ‘hot’.

This hooking proccess is done using the hotloop and hotcall macros. For the purpose of this post, we’ll focus on hotloop:

|// Decrement hashed hotcount and trigger trace recorder if zero.

|.macro hotloop, reg

// calculating hotcount[] hashtable idx

| mov reg, PC

| shr reg, 1

| and reg, HOTCOUNT_PCMASK

// decrementing hotcounter

| sub word [DISPATCH+reg+GG_DISP2HOT], HOTCOUNT_LOOP

// if reached below 0, mark this as a hot loop/candidate for tracing

| jb ->vm_hotloop

|.endmacroThis macro decrements the hotcount and checks if it was under-flowed(reached below 0). If it is, then this part of the code considered to be as a potential candidate for JITing. The example below shows how this hook is embedded into a BC_FORL loop instruction:

case BC_FORL:

|.if JIT

| hotloop RB

|.endif

In the last instruction of the macro(jb ->vm_hotloop) the tracing starts:

|->vm_hotloop: // Hot loop counter underflow.

|.if JIT

// Pop the GCfuncL from the stack and read pc

| mov LFUNC:RB, [BASE-8]

| mov RB, LFUNC:RB->pc

// Using `GCProto->framesize` to calculate the first free slot in the stack

| movzx RD, byte [RB+PC2PROTO(framesize)]

| lea RD, [BASE+RD*8] // same as `curr_topL` macro

// Preparing the `lua_State` fields

| mov L:RB, SAVE_L

| mov L:RB->base, BASE

| mov L:RB->top, RD // new top

// Set argument registers before calling `lj_trace_hot`

| mov FCARG2, PC

| lea FCARG1, [DISPATCH+GG_DISP2J]

| mov aword [DISPATCH+DISPATCH_J(L)], L:RBa

// Save PC and call `lj_trace_hot()`

| mov SAVE_PC, PC

| call extern lj_trace_hot@8 // lj_trace_hot(jit_State *J, const BCIns *pc)

| jmp <3 // jump backwards(<) to label 3

|.endifTowards the end, there’s a call to lj_trace_hot, which is the entrypoint for the ‘recording phase’.

Step 2: Record ‘hot’ code-paths, generate IR code

Recording Traces

In the recording phase, all the dynamic dispatchers/opcode handlers(from idx 0 to 89) are replaced with a callback to lj_vm_record:

src/lj_dispatch.c#L157-L163

/* The recording dispatch also checks for hooks. */

ASMFunction f = (mode & DISPMODE_PROF) ? lj_vm_profhook :

(mode & DISPMODE_REC) ? lj_vm_record : lj_vm_inshook;

uint32_t i;

for (i = 0; i < GG_LEN_SDISP; i++)

disp[i] = f;

}Note: If you recall from the previous blogpost where I mentioned briefly about static and dynamic dispatchers, this is the time to talk about the differences between the two :^) The

dispatch[]array is composed of two parts: the 1st part is the dynamic dispatchers, and the 2nd part is the static dispatchers. Essentially, both of them contain the same data: pointers to opcode handlers. The only difference is in the usage of them/their purpose: the dynamic dispatchers are replaced during the recording phase for hooking purposes, and the static dispatchers are never changed, they are saved as a backup to continue normal execution(example below).

What this callback is doing is to trigger a call to lj_dispatch_ins, which performs a couple of sanity checks, followed by a jump to the real opcode handler(jmp aword [DISPATCH+OP*8+GG_DISP2STATIC]) to continue normal execution:

|->vm_record: // Dispatch target for recording phase.

// jumping to 1:

...

|1:

| mov L:RB, SAVE_L

| mov L:RB->base, BASE

| mov FCARG2, PC // Caveat: FCARG2 == BASE

| mov FCARG1, L:RB

| // SAVE_PC must hold the _previous_ PC. The callee updates it with PC.

| call extern lj_dispatch_ins@8 // (lua_State *L, const BCIns *pc)

| mov BASE, L:RB->base

| movzx RA, PC_RA

| movzx OP, PC_OP

| movzx RD, PC_RD

|.if X64

| jmp aword [DISPATCH+OP*8+GG_DISP2STATIC] // Re-dispatch to static ins.

|.else

| jmp aword [DISPATCH+OP*4+GG_DISP2STATIC] // Re-dispatch to static ins.

|.endifEssentially, the call to lj_dispatch_ins boils down to a executing trace_state(), where the main logic for tracing is found:

switch (J->state) {

case LJ_TRACE_START:

J->state = LJ_TRACE_RECORD;

trace_start(J);

lj_dispatch_update(J2G(J));

/* ... */

case LJ_TRACE_RECORD:

lj_record_ins(J); // emit IR code

/* ... */

case LJ_TRACE_END:

/* ... */

case LJ_TRACE_ASM:

/* ... */

}

As you can see above, the tracing proccess is comprised of multiple steps:

LJ_TRACE_START: Entrypoint for recording, responsible for setup of dispatchers, etc.LJ_TRACE_RECORD: Will record every instruction that is executed and emit the relevant IR code.LJ_TRACE_ASM: Convert the IR to raw assembly code.LJ_TRACE_END: Stopping the trace. We reach here in one of the following scenarios:- The recorder was trying to record an NYI instruction(will be described below)

- The code jumped back to the beginning of the loop

- Recursion

Generating IR Code

As we’ve seen above, the process of tracing is basically to call repeatedly to trace_state through the hooks of lj_vm_record which records the next bytecode that is about to be executed. In this process, we are converting the bytecode into a LuaJIT custom IR code.

Note: Lua IR code is an ‘SSA form IR’, which stands for Static single-assignment Intermediate Representation: You probablly asking yourself why would the LuaJIT engine need this extra layer. Why would we convert the bytecode → IR → assembly. Shouldn’t we just skip the IR step and convert bytecode to assembly directly? Well, the purpose behind SSA IR is to simplify/abstract the code in a way that optizimation algorithms will be able to analyze it better. Here’s a nice intro for the subject if you’d like to get a better background: Compiler Design Module 70 : Static Single Assignment IR

The LuaJIT engine emits the IR code during the recording phase using the logic in lj_record_ins, this function has lots conditions in it in order to cover a wide variety of instructions, but eventually, most of it boils down to one key macro: emitir() which adds a new IR instruction to the recording.

Each IR instruction is 64-bit long and has the following structure(IRIns):

16 16 8 8 8 8

+-------+-------+---+---+---+---+

| op1 | op2 | t | o | r | s |

+-------+-------+---+---+---+---+

| op12/i/gco32 | ot | prev | (alternative fields in union)

+-------+-------+---+---+---+---+

| TValue/gco64 | (2nd IR slot for 64 bit constants)

+---------------+-------+-------+

32 16 16

There are plenty of IR instructions, and I won’t go over each and every one of them because the LuaJIT wiki docs already did a decent job with that. However, I will go over one key instruction that I find important - the SLOAD instruction:

0001 int SLOAD #2 CI

0002 num SLOAD #1 T

The SLOAD instruction loads a variable from the Lua stack to the IR trace. It has two operands:

- op1: Stack slot to load. The Lua variable is referenced in a lexicographical order of definition. Meaning:

SLOAD #1will load the 1st local variable of the function,SLOAD #2will load the 2nd var, and so on.- Slot

#0is saved for the closure/frame base.

- op2: flags, this part is less documented in the LuaJIT wiki and also part of the reason I thought it’s worth writing it here:

- In the IR snippet above you can see letters like

CIandT, each letter is a flag and it stores additional information about the IR slot itself and what to do when loading. For example,Tis for Type check. The defenitions/‘modes’ ofSLOADcan be found at src/lj_ir.h#L221-L227

- In the IR snippet above you can see letters like

Another important thing to understand about SSA IR is that every instruction is an abstract representation of a new value/‘new version of a variable’, and each instruction can reference the result of another instruction. To make an example, let’s take the following lua code:

local result = 0

result = result + 200

result = result - 50

result = result + 50In an IR dump, it will look something like that, I added some comments so it will be easier to follow:

0002 num SLOAD #1 T // loading the 1st local variable.

0003 num ADD 0002 +200 // referencing the result of IR number `0002`, and adding 200

0004 num SUB 0003 +50 // referencing the result of IR number `0003` and subtracting 50

0005 num ADD 0004 +50 // referencing the result of IR number `0004` and adding 50Organization of the IR buffer

The IR is kept in an array of IRInss, this array(J→irbuf[]) has two parts:

- IR Constants, grows downwards.

- Non-constants(=IR Instructions), grows upwards.

The whole ‘upwards’ and ‘downwards’ concept might be confusing at first, but it’s actually very clever and includes some pointer magic underneath:

The following snippet shows the initialization of the IR buffer on the heap:

} else {

baseir = (IRIns *)lj_mem_realloc(J->L, NULL, 0, LJ_MIN_IRSZ*sizeof(IRIns)); // allocating buffer for the IR trace.

J->irbotlim = REF_BASE - LJ_MIN_IRSZ/4; // 8 slots for constants

J->irtoplim = J->irbotlim + LJ_MIN_IRSZ; // 24 slots for IR instructions

}

J->cur.ir = J->irbuf = baseir - J->irbotlim; // Biasing the pointer! The last line of the snippet is where the magic happens. We are subtracting from baseir(which is the original address of the IR buffer array) a very large value. As a result, J->irbuf[0] now pointing waaay back in memory to an invalid address.

In order to re-adjust the pointer and reach back to the IR buffer, we’ll have to index it as J→irbuf[. This will make us land somewhere in the middle of the buffer.REF_BIAS]

With all this pointer magic done, the IR array buffer now has a ‘butterfly’ shape, and it can grow downwards(lj_ir_growbot) and upwards(lj_ir_growtop):

<-- constants --\ /-- non-constants -->

~--+-----+-----+-----+-----+-----+-----+--~

|false|true |nil | | | |

~--+-----+-----+-----+-----+-----+-----+--~

^ &ir[REF_BIAS]

(ascii art credit: Vyacheslav Egorov’s slides)

This arrangement has some benefits, one of them is that it’s very deterministic: when iterating through the IR instructions, it is common to find that some of them are trying to reference another slot in the IR buffer. In order to determine whether the IR is a referring to a constant or non-constant, you just need to verify whether val < REF_BASE. In fact, this is exactly what irref_isk() is doing.

Guards

Every trace is linear, which means that one trace corresponds to one code path/execution flow. Let’s take an example Lua script below to demonstrate what it means:

function lol(n)

for i=0, 100, 1 do

if n > i then

print('n is greater than i')

else

print('n is less than than i')

end

end

end

lol(60)If we breakdown and visualize what happens in the 56th iteration(=when this loop becomes hot), this is how the recorder sees the loop:

The red paths are the paths that LuaJIT recorded, and the blue part is a branch that the interpreter never encountered while JITing this loop(because during the recording, i was below the 60 and above the tracing threshold). What it means is that: in the final assembly code, the else branch will never be present. What LuaJIT is doing in order to maintain consistency is inserting ‘Guarded Assertions’ in those missing branches. Guards are tiny assembly stubs that will bail out the JIT’ed trace and return the execution back to interpretation mode.

In IR, the technical term to describe an ‘opportunity’ to bail out from a compiled trace is called side-exit, or exit.

Guards are not just meant to enforce execution paths of if/else statements, they can be used to verify that the type of a certain variable is still the same/haven’t changed: imagine if you have a polymorphic code containing a hot loop, and it changes one of the variables types from string to int, then, entering the JITed loop again. This can cause some serious memory-corruption issues such as Type Confusion.

Note: Root traces have a default hotness threshold of 56 and side traces have a hotness threshold of 10.

The logic behind the linear tracing concept lays in the assumption that in most programs, a hotloop will most likely to take the same execution path.

Snapshots

The LuaJIT IR uses snapshots: Snapshots hold the execution state from the view of the bytecode interpreter at selected points in a trace. Every snapshot saves a specific bytecode execution state, which can later be restored during a trace exit. Snapshots are emitted into the IR stack(J→slot[]) and provide the link between the trace and the bytecode layer.

To inspect this yourself, you can print snapshot data using the -jdump=is option.

We’ll take the following Lua script, which is doing multiple changes to the result variable:

function add_sub()

local result = 0

for i=1, 100, 1 do -- trigger JIT/hotloop

result = result + 200

result = result - 50

result = result + 50

end

return result

end

print('result: ', add_sub())Below is the IR dump, with snapshots:

$ luajit -jdump=is tmp-scripts/mods.lua

---- TRACE 1 start mods.lua:3

---- TRACE 1 IR

.... SNAP #0 [ ---- ]

0001 int SLOAD #2 CI

0002 > num SLOAD #1 T

0003 num ADD 0002 +200

0004 num SUB 0003 +50

0005 + num ADD 0004 +50

0006 + int ADD 0001 +1

.... SNAP #1 [ ---- 0005 ]

0007 > int LE 0006 +100

.... SNAP #2 [ ---- 0005 0006 ---- ---- 0006 ]

0008 ------ LOOP ------------

0009 num ADD 0005 +200

0010 num SUB 0009 +50

0011 + num ADD 0010 +50

0012 + int ADD 0006 +1

.... SNAP #3 [ ---- 0011 ]

0013 > int LE 0012 +100

0014 int PHI 0006 0012

0015 num PHI 0005 0011

---- TRACE 1 stop -> loop

result: 20000

A quick breakdown of what we see here:

- Each snaphot (

SNAP) lists the modified stack slots and their values. The i-th value in the snapshot list represents the index of the IR that writes a value in slot number i.- For example, in

SNAP #2above, you can see that index 1 contains0005, it means that the latest change that was made to the 1st stack slot(loaded in0002→SLOAD #1) was in instruction number0005. - The additional

---- ---- 0006that was added to the end of the trace sometime can confuse, because we neverSLOADed vars in those indexes. These are temporary, internal variables created for looping purposes by the LuaJIT engine: the 1st is the limit, 2nd is the step, and the 3rd is a copy of i.

- For example, in

---indicates that the slot has not changed/is not written.- In some of the instructions, there’s a

>characters, this char indicating that this is a Guard. This Guard asserts some conditions, if the condition fails: the execution bails out/returns to the interpreter and the state is recovered using the snapshot data usingsnap_restoreval,lj_snap_restore.

The snapshot syntax can sometimes be confusing, It’s recommended to read this thread from the LuaJIT mailing list to get a better understanding.

NYI and Trace Stitching

NYI(Not Yet Implemented) is a category of instructions that cannot be traced.

When LuaJIT encounters an instruction that it can’t compile, like a coroutine function, or next(), the trace is aborted. That goes for LuaJIT 2.0; In version 2.1, ‘trace stitching’ was introduced, which enables LuaJIT to resume the trace recording after a NYI instruction is executed. The way this mechanism is implemented is as follows:

Let’s say we have a hotloop that contains a call to print() and the interpreter was about to execute the FUNCC instruction(this is an NYI) in order to invoke the print() method:

function foo()

for i=0, 100, 1 do

if 200 > i then -- 'ISGE 3 4', dummy condition to add complexity

print('200 is greater') -- 'FUNCC': triggers an exit+stitch

end -- 'JFORL 0 1'

end

end

foo()The following will get executed:

recff_nyi is triggered and starts a stitch → calls recff_stitch:

static void recff_stitch(jit_State *J)

{

ASMFunction cont = lj_cont_stitch;

lua_State *L = J->L;

TValue *base = L->base;

BCReg nslot = J->maxslot + 1 + LJ_FR2;

TValue *nframe = base + 1 + LJ_FR2;

const BCIns *pc = frame_pc(base-1);

TValue *pframe = frame_prevl(base-1);

/* Move func + args up in Lua stack and insert continuation. */

memmove(&base[1], &base[-1-LJ_FR2], sizeof(TValue)*nslot);

setframe_ftsz(nframe, ((char *)nframe - (char *)pframe) + FRAME_CONT);

setcont(base-LJ_FR2, cont);

setframe_pc(base, pc);

setnilV(base-1-LJ_FR2);

L->base += 2 + LJ_FR2;

L->top += 2 + LJ_FR2;

/*...*/

lj_record_stop(J, LJ_TRLINK_STITCH, 0); // stopping the traceThe way it performs the stitching is by inserting a ‘continuation frame’ with a callback pointing to lj_cont_stitch into the Lua stack and stopping the trace with a LJ_TRLINK_STITCH flag. The ‘continuation’ callback is placed on the stack in a way that allows the C function(print) to be executed, and then when it ends, the trace will continue(hence the name, ‘continuation’):

| // Stitch a new trace to the previous trace.

| mov [DISPATCH+DISPATCH_J(exitno)], RB

| mov L:RB, SAVE_L

| mov L:RB->base, BASE

| mov FCARG2, PC

| lea FCARG1, [DISPATCH+GG_DISP2J]

| mov aword [DISPATCH+DISPATCH_J(L)], L:RBa

| call extern lj_dispatch_stitch@8 // (jit_State *J, const BCIns *pc)

| mov BASE, L:RB->base

| jmp ->cont_nopThe call to lj_dispatch_stitch will start a new trace, which will eventually be linked(or, ‘stitched’) to the previous trace during the assembly generation phase.

The dump below demonstrates how the traces are splitted:

$ luajit -jdump=risAb tmp-scripts/foo.lua

...

200 is greater

200 is greater

---- TRACE 1 start foo.lua:2

0005 KSHORT 4 200

0006 ISGE 3 4

0007 JMP 4 => 0011

0008 GGET 4 0 ; "print"

0009 KSTR 5 1 ; "200 is greater"

0010 CALL 4 1 2

0000 . FUNCC ; print

---- TRACE 1 IR

.... SNAP #0 [ ---- ]

0001 rbp int SLOAD #1 CI

.... SNAP #1 [ ---- 0001 ---- ---- ---- ]

0002 > int LT 0001 +200

.... SNAP #2 [ ---- 0001 ---- ---- ---- ]

0003 rbx fun SLOAD #0 R

0004 rbx tab FLOAD 0003 func.env

0005 int FLOAD 0004 tab.hmask

0006 > int EQ 0005 +63

0007 rbx p32 FLOAD 0004 tab.node

0008 > p32 HREFK 0007 "print" @21

0009 > fun HLOAD 0008

0010 > fun EQ 0009 print

0011 xmm7 num CONV 0001 num.int

.... SNAP #3 [ ---- 0011 ---- ---- ---- trace: 0x412fbc28 [0x000032df] print|"200 is greater"~ ]

---- TRACE 1 stop -> stitch

200 is greater

200 is greater

---- TRACE 2 start 1/stitch foo.lua:4

0011 JFORL 0 1

---- TRACE 2 IR

.... SNAP #0 [ ---- ]

0001 xmm7 num SLOAD #1 I

0002 xmm7 num ADD 0001 +1

.... SNAP #1 [ ---- ]

0003 > num LE 0002 +100

.... SNAP #2 [ ---- 0002 ---- ---- 0002 ]

---- TRACE 2 stop -> 1

200 is greater

...

If you’re still new to this, I’d recomendded inspect the link types between the traces in luajit.me, I found it as a great visualization tool.

Step 3: JIT-compilation

In this step, the IR is transformed into a raw assembly opcodes using lj_asm_trace(). Suprisingly enough, the loop that performs this conversion is iterating through the IR code backwards. It’s done backwards for optimization purposes and lays on the assumption that ‘liveness flows backwards’, i.e: If you can’t find a reference for a variable(IR slot) earlier in the loop(!ra_used(ir)), it’s considered as a ‘dead code’ and shouldn’t be compiled. Clever!

The following snippet demonsrates the assembly generation loop:

/* Assemble a trace in linear backwards order. */

for (as->curins--; as->curins > as->stopins; as->curins--) {

IRIns *ir = IR(as->curins);

lua_assert(!(LJ_32 && irt_isint64(ir->t))); /* Handled by SPLIT. */

if (!ra_used(ir) && !ir_sideeff(ir) && (as->flags & JIT_F_OPT_DCE))

continue; /* Dead-code elimination can be soooo easy. */

if (irt_isguard(ir->t))

asm_snap_prep(as);

RA_DBG_REF();

checkmclim(as);

asm_ir(as, ir); // <------ Spitting out ASM instructions

}If you’re interested reading more about Liveness Analysis try: Wikipedia: Live-Variable Analysis, Paper: University of Linz, Practical CS, Youtube: Liveness Analysis, Blog: Reverse Linear Scan Allocation.

The J→trace[] array containing pointers to the JIT’ed traces. And eventually, the pointer to the machine code is saved at the J→trace[idx]→mcode member:

gef➤ x/5i (*(GCtrace*)(J->trace[2]->gcptr32))->mcode

0x55557f65fecb <TRACE_2>: mov DWORD PTR ds:0x40000410,0x2

0x55557f65fed6 <TRACE_2+11>: movsd xmm6,QWORD PTR ds:0x4001b9e8

0x55557f65fedf <TRACE_2+20>: movsd xmm5,QWORD PTR ds:0x4001b9d8

0x55557f65fee8 <TRACE_2+29>: movsd xmm7,QWORD PTR [rdx+0x8]

0x55557f65feed <TRACE_2+34>: addsd xmm7,xmm5

...

gef➤ x/5i (*(GCtrace*)(J->trace[1]->gcptr32))->mcode

0x55557f65ff11 <TRACE_1>: mov DWORD PTR ds:0x40000410,0x1

0x55557f65ff1c <TRACE_1+11>: cvttsd2si ebp,QWORD PTR [rdx+0x8]

0x55557f65ff21 <TRACE_1+16>: cmp DWORD PTR [rdx+0x4],0xfffeffff

0x55557f65ff28 <TRACE_1+23>: jae 0x55557f650010

0x55557f65ff2e <TRACE_1+29>: movsd xmm6,QWORD PTR [rdx]

...

Step 4: Executing the JIT’ed code

In this phase, we need to take the generated assembly trace and create a link between the Lua VM to the JITed code. To achieve this, trace_stop is called and the Lua opcode is hot-patched(=the Lua instruction where the trace started):

- The instruction is patched to be the JIT version of itself(i.e:

FORL→ becomesJFORL). - The trace number is stored in operand D of the Lua instruction.

switch (op) {

case BC_FORL:

setbc_op(pc+bc_j(J->cur.startins), BC_JFORI); /* Patch FORI, too. */

/* fallthrough */

case BC_LOOP:

case BC_ITERL:

case BC_FUNCF:

/* Patch bytecode of starting instruction in root trace. */

setbc_op(pc, (int)op+(int)BC_JLOOP-(int)BC_LOOP);

setbc_d(pc, traceno);

addroot:

/* Add to root trace chain in prototype. */

J->cur.nextroot = pt->trace;

pt->trace = (TraceNo1)traceno;

break;

case BC_RET:

case BC_RET0:

case BC_RET1:

*pc = BCINS_AD(BC_JLOOP, J->cur.snap[0].nslots, traceno);

goto addroot;

case BC_JMP:

/* Patch exit branch in parent to side trace entry. */

lua_assert(J->parent != 0 && J->cur.root != 0);

lj_asm_patchexit(J, traceref(J, J->parent), J->exitno, J->cur.mcode);

/* Avoid compiling a side trace twice (stack resizing uses parent exit). */

traceref(J, J->parent)->snap[J->exitno].count = SNAPCOUNT_DONE;

/* Add to side trace chain in root trace. */

{

GCtrace *root = traceref(J, J->cur.root);

root->nchild++;

J->cur.nextside = root->nextside;

root->nextside = (TraceNo1)traceno;

}

break;

case BC_CALLM:

case BC_CALL:

case BC_ITERC:

/* Trace stitching: patch link of previous trace. */

traceref(J, J->exitno)->link = traceno;

break;I created the following diagram which supposed to summarise the whole flow on a higher level:

What’s next

Even though this post is intended to be a (somewhat) deep walkthrough/source code tour, some of the stuff were described on a higher-level because I just found myself digging too deep into the internals of LuaJIT for a single blogpost, which can make it too long. So instead, I’ll list here some of the topics I that I felt needed more attention, feel free to DM me your vote on what type of content would you like to read:

- The IR Stack & Snapshots: How snapshots are compressed, recovered, etc.

- Garbage Collection.

- JIT Optimizations:

- DCE: Dead-code Elimination. Liveness Analysis.

- CSE: Common Subexpression Elimination.

- DSE: Dead-store Elimination.

- FWD: Store-to-load forwarding, Load-to-store forwarding.

- NARROW: Narrowing of numbers to integers (double to int32_t).

- SPLIT: Split 64 bit IR instructions into 32 bit IR instructions.

- ABCelim: Bound check elimination.

- SINK: Allocation Sinking and Store Sinking.

- The Fast Functions (

FF_*) interface. - The VMEvent ‘ecosystem’(

lj_vmevent_send,setvmstateand friends): how it works, purpose.

Thanks for reading! I hope it was informative. See you on part 3, where we’ll do some JIT pwning :^)